The AI Chip Wars: How NVIDIA, AMD, and Emerging Players Are Reshaping the Semiconductor Battlefield

The artificial intelligence revolution isn’t just about algorithms and data—it’s fundamentally about the silicon that powers it all. As AI applications explode across industries, from autonomous vehicles to large language models, the demand for specialized processing power has created one of the most intense competitive battles in tech history: the AI chip wars.

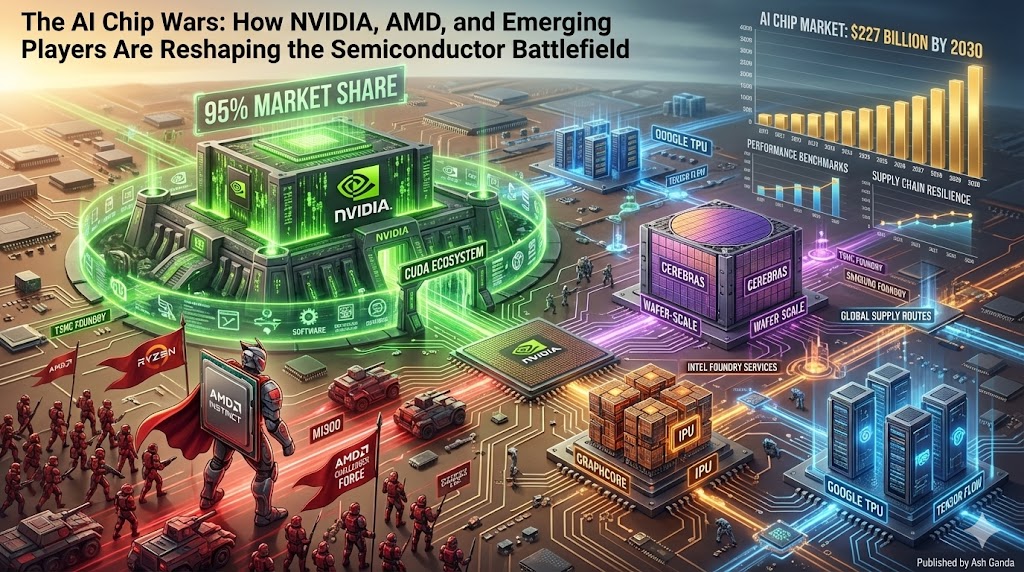

This isn’t just another technology skirmish. The stakes are enormous: by one industry projection, the global AI chip market will reach $227 billion by 2030. The companies that dominate this space won’t just profit; they’ll control much of the infrastructure that powers the future of human-computer interaction.

Let’s dive deep into how NVIDIA built its seemingly unassailable fortress, how AMD is mounting its counterattack, and why a wave of emerging challengers might reshape this battlefield entirely.

NVIDIA’s Stranglehold: From Gaming to AI Dominance

NVIDIA’s journey to AI chip supremacy reads like a masterclass in strategic positioning. Originally focused on graphics processing for gaming, NVIDIA made a prescient bet in the mid-2000s, when it introduced CUDA (announced in 2006), a platform for programming its GPUs to handle general-purpose computing tasks.

This decision proved transformational when the AI boom hit. Unlike traditional CPUs, which are optimized to run a small number of threads very quickly, GPUs excel at massively parallel computation, exactly what AI workloads demand. When researchers needed to train deep learning models, NVIDIA’s chips were ready and waiting.
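To make the sequential-versus-parallel contrast concrete, here is a minimal Python sketch of the data-parallel pattern GPUs exploit. It is a CPU-side simulation, not real GPU code: the hypothetical `saxpy_kernel` mimics a CUDA-style kernel in which each thread computes exactly one output element from its own index, and the hypothetical `launch` stands in for a kernel launch by submitting every index to a thread pool. Python threads will not actually speed this up; the point is that no element depends on any other, which is the property GPUs turn into thousands of concurrent hardware threads.

```python
from concurrent.futures import ThreadPoolExecutor


def saxpy_sequential(a: float, x: list[float], y: list[float]) -> list[float]:
    """CPU-style loop: compute a*x + y one element after another, in order."""
    out = []
    for i in range(len(x)):
        out.append(a * x[i] + y[i])
    return out


def saxpy_kernel(i: int, a: float, x: list[float], y: list[float], out: list[float]) -> None:
    """Hypothetical 'kernel': the work done by the single thread with index i.

    Each thread writes only out[i], so no thread waits on another.
    """
    if i < len(x):  # bounds check, as GPU kernels do when threads outnumber elements
        out[i] = a * x[i] + y[i]


def launch(n_threads: int, a: float, x: list[float], y: list[float]) -> list[float]:
    """Simulate a kernel launch: run the kernel once per thread index."""
    out = [0.0] * len(x)
    with ThreadPoolExecutor() as pool:
        # All indices are submitted at once; completion order does not matter.
        futures = [pool.submit(saxpy_kernel, i, a, x, y, out) for i in range(n_threads)]
        for f in futures:
            f.result()  # re-raise any exception from a worker
    return out


if __name__ == "__main__":
    x = [1.0, 2.0, 3.0, 4.0]
    y = [10.0, 20.0, 30.0, 40.0]
    print(saxpy_sequential(2.0, x, y))  # [12.0, 24.0, 36.0, 48.0]
    print(launch(8, 2.0, x, y))         # same result; threads 4-7 hit the bounds check
```

The design mirrors how GPU programs are usually written: the programmer describes the work for one index, and the hardware decides how many indices run at once.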

The CUDA Ecosystem Advantage

NVIDIA’s true genius wasn’t just in hardware; it was in creating an entire ecosystem. CUDA became the de facto programming platform for AI development, with thousands of developers building expertise in it. This created powerful network effects: the more developers used CUDA, the more valuable NVIDIA’s platform became, which attracted even more developers.

By some estimates, NVIDIA today commands roughly 95% of the market for AI training chips. Their H100 and A100 GPUs have become the gold standard for everything from training ChatGPT to developing autonomous vehicle systems. Major cloud providers like Amazon, Google, and Microsoft compete fiercely to secure NVIDIA chip allocations.

The Software Moat

While competitors focus on matching NVIDIA’s hardware specs, they often underestimate the software advantage. NVIDIA’s software stack includes:

- CUDA runtime libraries optimized for AI workloads

- cuDNN for deep neural network primitives

- TensorRT for inference optimization

- Omniverse for collaborative 3D development

This comprehensive software ecosystem means switching from NVIDIA isn’t just about buying different hardware; it can require rewriting significant parts of an application, especially custom GPU code.

AMD’s Strategic Counteroffensive

AMD, long known as the scrappy underdog in the CPU wars against Intel, has set its sights on breaking NVIDIA’s AI chip monopoly. Their approach combines aggressive hardware development with a crucial strategic insight: the industry desperately wants an alternative to NVIDIA’s dominance.

The ROCm Revolution

AMD’s primary weapon is ROCm (Radeon Open Compute), an open-source platform designed to challenge CUDA’s hegemony. Unlike NVIDIA’s proprietary approach, ROCm embraces open standards, potentially appealing to companies wary of vendor lock-in.
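One way ROCm tries to lower switching costs is at the framework level. PyTorch’s HIP (ROCm) documentation states that ROCm builds of PyTorch reuse the existing `torch.cuda` interfaces, so much model code written for NVIDIA GPUs can run unchanged on AMD hardware. The sketch below reports which backend a local install would use; the function name `detect_backend` is our own illustration, not part of any library, and the code assumes PyTorch may or may not be installed.

```python
def detect_backend() -> str:
    """Report which accelerator backend the local PyTorch install would use.

    Returns "rocm", "cuda", or "cpu". Falls back to "cpu" when PyTorch
    is not installed, so the sketch runs anywhere.
    """
    try:
        import torch
    except ImportError:
        return "cpu"

    # ROCm builds of PyTorch expose AMD GPUs through the torch.cuda namespace,
    # so this one availability check covers both vendors.
    if not torch.cuda.is_available():
        return "cpu"

    # torch.version.hip is a version string on ROCm builds and None otherwise.
    if getattr(torch.version, "hip", None):
        return "rocm"
    return "cuda"


if __name__ == "__main__":
    backend = detect_backend()
    print(f"Running on: {backend}")
    # Downstream model code can use the same device string on NVIDIA or AMD:
    device = "cuda" if backend in ("cuda", "rocm") else "cpu"
    print(f"torch device string: {device}")
```

The vendor difference is confined to a single check, and everything downstream uses the device string "cuda". This reduces, but does not eliminate, porting work: hand-written CUDA kernels still have to be translated to AMD’s HIP before they run on ROCm.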

Their MI300 series chips represent a significant technological leap, featuring:

- Unified memory shared by CPU and GPU cores (on the MI300A variant, which combines both on one package)

- Advanced packaging that integrates multiple chip types

- Competitive performance on AI training workloads

- Lower total cost of ownership than comparable NVIDIA solutions, according to AMD

Major Customer Wins and Beyond

AMD’s strategy received a major boost when Microsoft chose AMD chips for some Azure AI workloads, and Meta began using MI300 processors for AI research. These high-profile deployments provide crucial real-world validation and help overcome the “nobody gets fired for buying NVIDIA” mentality.

The company is also betting heavily on inference workloads, the process of running trained AI models in production. While NVIDIA dominates training, the inference market is widely expected to grow larger and may be more accessible to competitors.

Challenges and Opportunities

AMD faces significant headwinds. Its software ecosystem, while improving, still lags behind NVIDIA’s, which had a roughly decade-long head start. Many AI frameworks and tools are optimized for CUDA first, with AMD support added later (if at all).

However, AMD has advantages too. Its close relationships with major cloud providers, built through its server CPU business, give it established sales channels to leverage. It also stands to benefit as customers facing supply constraints and geopolitical uncertainty seek to diversify their chip suppliers.

The Emerging Challenger Ecosystem

While NVIDIA and AMD battle for dominance, a fascinating ecosystem of specialized challengers is emerging, each with unique approaches to the AI chip problem.

Custom Silicon Giants

Google’s TPU Strategy: Google’s Tensor Processing Units represent perhaps the most successful challenge to GPU dominance. Designed specifically for Google’s AI workloads, TPUs offer superior performance and efficiency for certain tasks. Google’s willingness to make TPUs available through Google Cloud has created a credible third option for many AI developers.

Amazon’s Graviton, Inferentia, and Trainium: Amazon has developed custom chips for general compute (Graviton), AI inference (Inferentia), and AI training (Trainium). By controlling both the hardware and the cloud platform, Amazon can offer compelling price-performance advantages for AWS customers.

Apple’s Neural Engine: While focused on edge computing rather than data center workloads, Apple’s approach shows how custom silicon can deliver exceptional AI performance while maintaining energy efficiency—a crucial factor for mobile and embedded applications.

Startup Innovation Wave

A new generation of startups is attacking specific segments of the AI chip market:

Cerebras Systems has built the Wafer-Scale Engine, a single processor that spans an entire silicon wafer and is far larger than any conventional chip, optimized for AI training workloads with massive parallel processing capabilities.

Graphcore developed Intelligence Processing Units (IPUs) specifically designed for machine learning, with a unique architecture that handles sparse computations more efficiently than traditional GPUs.

SambaNova Systems focuses on a reconfigurable dataflow architecture that can adapt itself to different AI workloads, potentially offering better efficiency than processors with fixed execution pipelines.
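Graphcore’s emphasis on sparse computation rests on a general principle that a quick operation count illustrates. The Python sketch below compares a dense matrix-vector product, which performs a multiply-add for every entry including zeros, with one over compressed sparse row (CSR) storage, which touches only the nonzero entries. The function names are hypothetical, and the sketch shows the general idea only; it makes no claim about how IPUs or any other chip implement sparsity.

```python
def dense_matvec(matrix: list[list[float]], vec: list[float]) -> tuple[list[float], int]:
    """Multiply every entry, zeros included; return (result, multiply-add count)."""
    ops = 0
    result = []
    for row in matrix:
        acc = 0.0
        for a, b in zip(row, vec):
            acc += a * b
            ops += 1
        result.append(acc)
    return result, ops


def to_csr(matrix: list[list[float]]) -> tuple[list[float], list[int], list[int]]:
    """Convert a dense matrix to CSR form: (values, column indices, row pointers)."""
    values, cols, row_ptr = [], [], [0]
    for row in matrix:
        for j, a in enumerate(row):
            if a != 0.0:
                values.append(a)
                cols.append(j)
        row_ptr.append(len(values))  # row r spans values[row_ptr[r]:row_ptr[r + 1]]
    return values, cols, row_ptr


def csr_matvec(values: list[float], cols: list[int], row_ptr: list[int],
               vec: list[float]) -> tuple[list[float], int]:
    """Multiply only the stored nonzeros; return (result, multiply-add count)."""
    ops = 0
    result = []
    for r in range(len(row_ptr) - 1):
        acc = 0.0
        for k in range(row_ptr[r], row_ptr[r + 1]):
            acc += values[k] * vec[cols[k]]
            ops += 1
        result.append(acc)
    return result, ops


if __name__ == "__main__":
    m = [
        [4.0, 0.0, 0.0, 0.0],
        [0.0, 0.0, 2.0, 0.0],
        [0.0, 0.0, 0.0, 0.0],
        [1.0, 0.0, 0.0, 3.0],
    ]
    v = [1.0, 2.0, 3.0, 4.0]
    print(dense_matvec(m, v))         # ([4.0, 6.0, 0.0, 13.0], 16)
    print(csr_matvec(*to_csr(m), v))  # ([4.0, 6.0, 0.0, 13.0], 4)
```

Both versions produce the same result, but the sparse one does a quarter of the arithmetic here. The catch is the irregular, index-driven memory access in `csr_matvec`, which is exactly what hardware tuned for dense, regular workloads handles poorly.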

The China Factor

Chinese companies like Biren Technology, Cambricon, and Horizon Robotics are developing AI chips partly in response to US export restrictions. While currently behind in absolute performance, rapid improvement and massive domestic investment make them potential long-term challengers.

Future Battlegrounds and Strategic Implications

The AI chip wars are far from over, and several key trends will shape the next phase of competition.

The Edge Computing Shift

As AI applications move from centralized data centers to edge devices—smartphones, autonomous vehicles, IoT sensors—the requirements change dramatically. Edge AI chips must balance performance with power efficiency, cost, and size constraints. This shift creates opportunities for new architectures and potentially undermines some of NVIDIA’s current advantages.

Software-Defined Silicon

The future may belong to chips that can reconfigure themselves for different workloads. Companies like Flex Logix and Achronix are developing programmable architectures that could offer ASIC-like performance with FPGA-like flexibility.

The Open Source Movement

RISC-V, an open-standard instruction set architecture, and other open hardware efforts are gaining momentum, potentially democratizing chip development. If successful, they could lead to an explosion of specialized AI processors designed for specific applications.

Geopolitical Complications

Trade tensions between the US and China are reshaping the global chip landscape. Export restrictions on advanced semiconductors are accelerating domestic chip development in multiple countries, potentially fragmenting what has historically been a global market.

Strategic Takeaways for the Tech Industry

The AI chip wars offer several crucial lessons for technology strategists:

Ecosystem beats pure performance: NVIDIA’s dominance stems as much from CUDA’s ubiquity as from raw chip performance. Building comprehensive software ecosystems takes time but creates sustainable competitive advantages.

Timing matters enormously: NVIDIA’s early bet on general-purpose GPU computing positioned them perfectly for the AI boom. Companies that anticipate the next major computing shift may reap similar rewards.

Specialization is the future: As AI workloads become more diverse, specialized processors optimized for specific tasks (training vs. inference, computer vision vs. natural language processing) will likely outperform general-purpose solutions.

Software differentiation is crucial: In a world where leading-edge chip manufacturing is concentrated in a few foundries, software becomes the key differentiator. The winners will be those who can provide complete, easy-to-use development environments.

Supply chain resilience is strategic: Recent chip shortages and geopolitical tensions have taught companies that relying on a single supplier—even a dominant one—carries significant risks.

The AI chip wars represent more than just a business competition—they’re a battle for the technological foundation of the next computing era. While NVIDIA currently holds the high ground, the rapid pace of innovation, massive market opportunities, and strategic importance of AI processing ensure this will remain one of the most dynamic and consequential battles in the technology industry.

As AI continues to transform every industry, the companies that control the chips powering this transformation will wield enormous influence over the digital future. The war is far from over, and the next chapter promises to be even more fascinating than the last.