Neuromorphic Computing: How Brain-Inspired Chips Are Revolutionizing AI Processing

Imagine a computer chip that processes information the way your brain does—consuming minimal power while handling complex tasks in real-time. This isn’t science fiction; it’s neuromorphic computing, and it’s poised to transform everything from autonomous vehicles to edge AI devices.

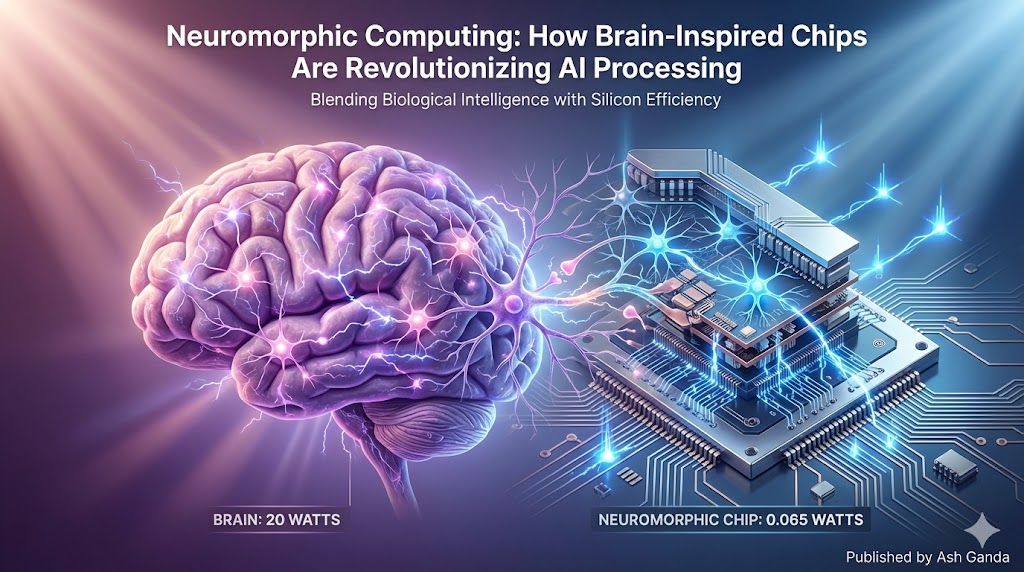

While traditional processors excel at sequential calculations, they struggle with the parallel, adaptive processing that makes biological brains so efficient. A human brain runs on roughly 20 watts of power, about as much as a dim light bulb, yet it still matches or beats far more power-hungry supercomputers at many pattern recognition, learning, and decision-making tasks. Neuromorphic computing aims to narrow this gap by fundamentally reimagining how we design and build computational systems.

As AI workloads become increasingly complex and energy consumption concerns mount, the tech industry is racing to develop chips that think more like brains and less like calculators. Let’s explore why this shift matters and what it means for the future of computing.

The Architecture Revolution: From Von Neumann to Neural Networks

Traditional computers follow the von Neumann architecture, where processing units and memory are separate, connected by a limited-bandwidth bus. This creates the “memory wall,” also known as the von Neumann bottleneck: on data-heavy workloads, processors can spend more time waiting for data than actually computing. It’s like having a brilliant chef who must constantly run to a distant pantry for each ingredient.

Neuromorphic chips break this paradigm by integrating memory and processing at the same location, mimicking how neurons and synapses work together. In biological brains, each synapse acts as both a memory storage unit and a processing element, enabling massively parallel computation with minimal energy transfer.

Consider Intel’s first-generation Loihi chip, which contains about 130,000 artificial neurons and 130 million synapses. Unlike traditional processors that step through instructions on a fixed clock, Loihi processes information asynchronously: neurons fire only when they receive sufficient input, which dramatically reduces power consumption. This event-driven approach mirrors how your brain processes sensory information, activating only the relevant neural pathways.
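To make “neurons fire only when they receive sufficient input” concrete, here is a minimal sketch of a leaky integrate-and-fire neuron, a common textbook model of a spiking neuron. It is not Loihi’s actual neuron model or API; the function name `simulate_lif` and the `threshold` and `leak` values are assumptions chosen for illustration.

```python
def simulate_lif(inputs, threshold=1.0, leak=0.9):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    `threshold` and `leak` are illustrative values, not taken from any chip.
    Returns the list of time steps at which the neuron fired.
    """
    potential = 0.0
    spike_times = []
    for t, current in enumerate(inputs):
        # Keep a fraction of the stored potential, then add the new input.
        potential = potential * leak + current
        if potential >= threshold:
            spike_times.append(t)
            potential = 0.0  # reset after firing
    return spike_times


if __name__ == "__main__":
    weak = [0.05] * 20                          # never accumulates to threshold
    burst = [0.0] * 5 + [0.6, 0.6] + [0.0] * 5  # two strong inputs in a row
    print(simulate_lif(weak))   # []
    print(simulate_lif(burst))  # [6]
```

The weak input leaks away as fast as it arrives, so the neuron stays silent. Only the short burst pushes it over the threshold, and that single spike is the only event the rest of the network would have to handle.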

The implications are profound. Where a GPU might draw hundreds of watts running a neural network, neuromorphic chips have handled some comparable inference tasks at milliwatt-scale power. For workloads that suit the event-driven model, the efficiency gain isn’t marginal; it can reach orders of magnitude, which makes AI processing practical in battery-powered devices where it previously was not.

Energy Efficiency: The Power of Thinking Small

The energy efficiency of neuromorphic computing stems from several key innovations that directly mirror biological neural networks. Traditional digital computers operate on a clock-driven system, where billions of transistors switch on and off at regular intervals regardless of whether they’re needed. It’s like keeping every light in a city on 24/7, whether anyone’s home or not.

Biological brains use sparse coding: by common estimates, only a small fraction of neurons (on the order of 1%) is active at any given moment. Neuromorphic chips replicate this efficiency through event-driven processing. Artificial neurons remain dormant until their accumulated input crosses a threshold, then emit a spike and return to their low-power state. Networks built from such neurons are called “spiking neural networks,” and on sparse, event-driven workloads they have been reported to reduce energy consumption by up to 1000x compared to traditional AI accelerators.
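A rough way to see why sparse activity saves work is to count how many neuron updates each style performs. The sketch below is a toy model, not a power measurement: the function name `count_updates`, the network size, and the assumption that each neuron is active independently with probability `active_fraction` are all illustrative.

```python
import random


def count_updates(num_neurons, num_steps, active_fraction, seed=0):
    """Compare update counts for clock-driven and event-driven processing.

    Clock-driven: every neuron is updated on every step.
    Event-driven: a neuron is updated only on steps where it is active.
    Activity is drawn at random (an assumption made for illustration).
    """
    rng = random.Random(seed)
    clock_driven = num_neurons * num_steps
    event_driven = 0
    for _ in range(num_steps):
        for _ in range(num_neurons):
            if rng.random() < active_fraction:
                event_driven += 1
    return clock_driven, event_driven


if __name__ == "__main__":
    clock, event = count_updates(num_neurons=1000, num_steps=100,
                                 active_fraction=0.01)
    print(f"clock-driven updates: {clock}")
    print(f"event-driven updates: {event}")
    print(f"reduction factor: {clock / max(event, 1):.0f}x")
```

With about 1% of neurons active, the event-driven count comes out near one hundredth of the clock-driven count. Real energy savings depend on much more than update counts, so treat the ratio as intuition rather than a prediction.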

IBM’s TrueNorth chip exemplifies this efficiency. With 1 million programmable neurons and 256 million synapses, it consumes roughly 65 milliwatts during operation, little enough to run for long periods on a small battery. Yet it can process complex visual recognition tasks in real time, making it well suited to always-on applications like security cameras or autonomous drones.
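The TrueNorth figures above imply a very small average power budget per neuron and per synapse. The short calculation below works that out from the numbers quoted in the text; the helper name `per_unit_power_nw` is hypothetical, and the result is a simple average rather than a description of how power is distributed on the chip.

```python
def per_unit_power_nw(total_power_w, unit_count):
    """Average power per unit in nanowatts (a plain division, nothing more)."""
    return total_power_w / unit_count * 1e9


# Figures quoted in the article for TrueNorth.
CHIP_POWER_W = 65e-3
NEURONS = 1_000_000
SYNAPSES = 256_000_000

if __name__ == "__main__":
    print(f"{per_unit_power_nw(CHIP_POWER_W, NEURONS):.1f} nW per neuron")
    print(f"{per_unit_power_nw(CHIP_POWER_W, SYNAPSES):.3f} nW per synapse")
```

That works out to about 65 nanowatts per neuron and roughly a quarter of a nanowatt per synapse on average.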

The energy advantages compound when considering data movement. Traditional AI systems shuttle massive amounts of data between processors and memory, consuming significant power. Neuromorphic architectures eliminate much of this movement by processing data where it’s stored, similar to how your brain doesn’t separate thinking from remembering.
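The data-movement argument can be illustrated with a toy energy model. Every cost in the sketch below is a hypothetical placeholder chosen only to show the shape of the trade-off. None of them is a measurement of a real chip, and the function name `workload_energy_pj` is an assumption as well.

```python
def workload_energy_pj(num_ops, compute_pj, fetch_pj, fetches_per_op):
    """Total energy in picojoules: compute cost plus data-movement cost."""
    return num_ops * (compute_pj + fetch_pj * fetches_per_op)


# Hypothetical, illustrative costs only (not measurements of any real chip).
COMPUTE_PJ = 1.0         # energy per arithmetic operation
REMOTE_FETCH_PJ = 100.0  # energy to fetch one operand from distant memory
LOCAL_FETCH_PJ = 1.0     # energy to read one operand stored beside the compute
NUM_OPS = 1_000_000
FETCHES_PER_OP = 2

separate = workload_energy_pj(NUM_OPS, COMPUTE_PJ, REMOTE_FETCH_PJ, FETCHES_PER_OP)
colocated = workload_energy_pj(NUM_OPS, COMPUTE_PJ, LOCAL_FETCH_PJ, FETCHES_PER_OP)

if __name__ == "__main__":
    print(f"separate memory:  {separate / 1e6:.0f} microjoules")
    print(f"colocated memory: {colocated / 1e6:.0f} microjoules")
    print(f"ratio: {separate / colocated:.0f}x")
```

Under these made-up costs, almost all of the energy in the separated design goes to fetching operands rather than computing on them. That is the effect neuromorphic architectures try to avoid by keeping weights next to the logic that uses them.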

Real-World Applications: Where Brain-Inspired Computing Shines

Neuromorphic computing isn’t just a laboratory curiosity—it’s already solving real problems across multiple industries. The technology excels in scenarios requiring real-time processing, low power consumption, and adaptive learning.

Autonomous Systems: Self-driving cars generate terabytes of sensor data daily, requiring instant processing to make split-second decisions. Traditional AI systems struggle with this real-time demand while maintaining reasonable power consumption. Mercedes-Benz is experimenting with neuromorphic vision systems that can detect obstacles and pedestrians while consuming a fraction of the power required by conventional computer vision systems.

Edge AI Devices: Smart cameras, IoT sensors, and wearable devices need AI capabilities without draining batteries. Prophesee’s neuromorphic vision sensors report only the pixels whose brightness changes, which lets them track objects and detect motion with claimed power savings of up to 1000x over traditional frame-based camera systems. These chips excel at processing dynamic scenes, making them a good fit for surveillance systems that only activate when detecting movement.
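The idea behind an event-based vision sensor can be approximated in software by comparing two frames and emitting an event only where a pixel changed enough. This is a simplified sketch, not Prophesee’s SDK or sensor design: real event sensors work per pixel and asynchronously, without frames. The function name `frame_to_events` and the `threshold` value are assumptions for illustration.

```python
def frame_to_events(prev_frame, frame, threshold=15):
    """Return (row, col, polarity) events for pixels whose brightness changed.

    Frames are lists of rows of integer brightness values. Polarity is +1 for
    a pixel that got brighter and -1 for one that got darker. `threshold` is
    an illustrative value.
    """
    events = []
    for r, (prev_row, row) in enumerate(zip(prev_frame, frame)):
        for c, (old, new) in enumerate(zip(prev_row, row)):
            diff = new - old
            if abs(diff) >= threshold:
                events.append((r, c, 1 if diff > 0 else -1))
    return events


if __name__ == "__main__":
    static = [[10, 10, 10], [10, 10, 10]]
    moved = [[10, 10, 10], [10, 200, 10]]
    print(frame_to_events(static, static))  # []
    print(frame_to_events(static, moved))   # [(1, 1, 1)]
```

A static scene produces no events at all, so downstream processing has nothing to do. That is why this style of sensing suits always-on monitoring.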

Robotics: Boston Dynamics and other robotics companies are exploring neuromorphic processors for real-time motor control and balance. Unlike traditional control systems that require extensive pre-programming, neuromorphic chips can adapt to unexpected situations—much like how you instinctively adjust when stepping on uneven ground.

Medical Devices: Cochlear implants and neural prosthetics stand to benefit from neuromorphic processing. These devices must interpret neural signals in real time while operating on minimal battery power. Neuromorphic chips are designed to process complex signal patterns at very low power, which could substantially extend the time between battery charges or replacements.

Financial Trading: High-frequency trading firms are investigating neuromorphic systems for pattern recognition in market data. These chips can identify subtle market trends and anomalies in real-time, potentially providing competitive advantages in microsecond-sensitive trading environments.

Industry Investment and Market Momentum

The neuromorphic computing market is attracting unprecedented investment from tech giants, governments, and venture capitalists. Intel has invested over $100 million in neuromorphic research, while IBM’s investment in brain-inspired computing spans more than a decade.

The European Union’s Human Brain Project was launched as a ten-year flagship with a planned budget of roughly €1 billion, aimed at understanding brain function and developing neuromorphic technologies. Similarly, the U.S. BRAIN Initiative has funded numerous neuromorphic research projects, recognizing their potential for both computing and neuroscience advancement.

Startup activity is equally impressive. BrainChip’s Akida processor targets edge AI applications, while SynSense focuses on always-on sensory processing. These companies aren’t just building better chips—they’re creating entirely new computing paradigms that could reshape how we think about artificial intelligence.

Major cloud providers are beginning to offer neuromorphic computing resources. Amazon Web Services recently announced partnerships with neuromorphic chip companies, allowing developers to experiment with brain-inspired algorithms without investing in specialized hardware.

The market momentum extends beyond pure-play neuromorphic companies. Traditional semiconductor giants like Qualcomm, Samsung, and TSMC are developing neuromorphic capabilities, recognizing that future AI applications will demand fundamentally different processing approaches.

Challenges and Future Outlook

Despite promising developments, neuromorphic computing faces significant challenges. Programming these systems requires new software frameworks and development tools. Traditional programming languages and algorithms don’t translate directly to neuromorphic architectures, creating a skills gap that the industry must address.

Standardization remains another hurdle. Unlike traditional processors with established programming models, neuromorphic chips vary significantly in architecture and capabilities. This fragmentation makes it difficult for developers to create portable applications across different neuromorphic platforms.

Manufacturing scalability presents additional challenges. While prototype neuromorphic chips show impressive capabilities, mass production at competitive costs requires new fabrication techniques and materials. The industry is still learning how to manufacture analog and digital components on the same chip reliably.

However, the trajectory is clear. As AI workloads continue growing and energy efficiency becomes paramount, neuromorphic computing offers a compelling path forward. The next five years will likely see neuromorphic processors move from research labs to commercial products, starting with specialized applications before expanding to mainstream computing.

The convergence of AI, edge computing, and sustainability concerns creates perfect conditions for neuromorphic adoption. Companies that master brain-inspired computing early will have significant advantages in the AI-driven economy.

Conclusion: The Dawn of Brain-Inspired Computing

Neuromorphic computing represents more than just another chip architecture—it’s a fundamental reimagining of how computers process information. By mimicking the brain’s efficient, parallel processing approach, these systems promise to make AI more accessible, sustainable, and powerful.

The technology’s impact will extend far beyond traditional computing applications. From enabling AI in battery-powered devices to processing vast sensor networks with minimal energy, neuromorphic chips will unlock capabilities that current architectures simply cannot achieve.

For technology leaders and strategists, the message is clear: neuromorphic computing isn’t a distant possibility—it’s an emerging reality that demands attention today. Companies that understand and prepare for this shift will be positioned to capitalize on the next wave of AI innovation.

The brain took millions of years to evolve its remarkable efficiency. Neuromorphic computing lets us capture that evolutionary advantage in silicon, creating a future where artificial intelligence is not just smart, but sustainably so. The question isn’t whether brain-inspired chips will transform computing—it’s how quickly we can adapt to this neural revolution.