The Future of AI Regulation: How Global Frameworks Are Reshaping Tech Strategy in 2024

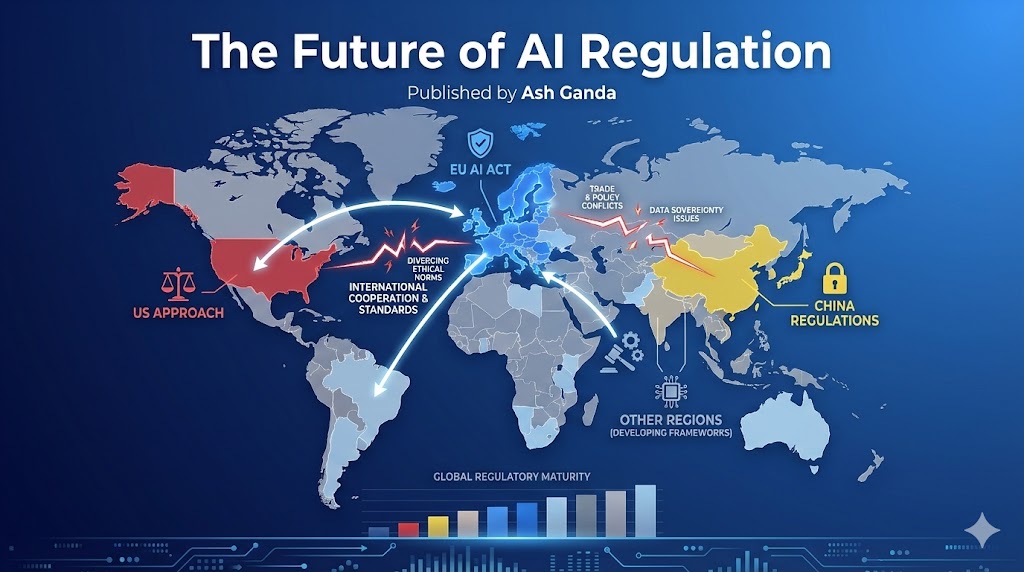

Artificial intelligence has reached an inflection point where its transformative potential is matched only by the urgent need for comprehensive regulation. As AI systems become increasingly sophisticated and ubiquitous, governments worldwide are racing to establish frameworks that balance innovation with safety, privacy, and ethical considerations.

The regulatory landscape is evolving quickly, with major jurisdictions taking distinctly different approaches. For tech leaders and strategists, understanding these emerging frameworks isn’t just about compliance; it’s about positioning for competitive advantage in an increasingly regulated market.

This analysis examines the key regulatory developments shaping AI’s future, their implications for businesses, and how organizations can navigate this complex landscape while maintaining their innovation edge.

The European Union: Leading with the AI Act

The European Union has positioned itself as the global frontrunner in AI regulation with its landmark AI Act, which came into force in August 2024. This comprehensive legislation establishes a risk-based approach that categorizes AI systems into four distinct categories: minimal risk, limited risk, high risk, and unacceptable risk.

The EU’s approach is particularly noteworthy for its extraterritorial reach. Providers that place AI systems on the EU market, and providers or deployers outside the EU whose systems’ output is used in the EU, fall within the Act’s scope regardless of where they are established. This creates a “Brussels Effect” similar to GDPR, where European standards become de facto global standards.

High-risk AI applications under the Act include:

- Biometric identification systems

- AI used in critical infrastructure

- Educational and vocational training systems

- Employment and worker management tools

- Essential private and public services

- Law enforcement systems

- Migration and border control management

- Administration of justice and democratic processes

For these systems, organizations must implement rigorous risk management systems, ensure high levels of accuracy and robustness, maintain detailed logs, and provide clear information to users. The penalties are substantial: up to €15 million or 3% of global annual turnover for breaching these obligations, and up to €35 million or 7% for engaging in prohibited practices, in each case whichever is higher.
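The “whichever is higher” rule is simple arithmetic, but it is easy to misread for large companies. The sketch below illustrates it using the €35 million and 7% figures quoted above; the function name and the two example turnovers are hypothetical, and the result is only the statutory ceiling, not a prediction of any actual fine.

```python
from decimal import Decimal


def max_fine_eur(global_turnover_eur: Decimal,
                 fixed_cap_eur: Decimal,
                 turnover_pct: Decimal) -> Decimal:
    """Ceiling of a fine under a 'fixed amount or percentage of turnover,
    whichever is higher' rule."""
    pct_cap = global_turnover_eur * turnover_pct / Decimal(100)
    return max(fixed_cap_eur, pct_cap)


# Figures quoted in this article: EUR 35 million or 7% of global turnover.
FIXED_CAP = Decimal("35000000")
PCT = Decimal("7")

# Hypothetical company with EUR 100 million turnover: 7% is EUR 7 million,
# so the fixed amount is the higher ceiling.
small = max_fine_eur(Decimal("100000000"), FIXED_CAP, PCT)

# Hypothetical company with EUR 2 billion turnover: 7% is EUR 140 million,
# so the percentage is the higher ceiling.
large = max_fine_eur(Decimal("2000000000"), FIXED_CAP, PCT)

print(small, large)
```

For any company with global turnover above €500 million, the percentage term is the one that sets the ceiling.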

Meanwhile, prohibited AI practices include:

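- Subliminal or manipulative techniques that operate beyond a person’s awareness

- Exploiting the vulnerabilities of specific groups, such as those related to age or disability

- Social scoring of individuals based on their behavior or personal characteristics

- Real-time remote biometric identification in publicly accessible spaces for law enforcement (with limited exceptions)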
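As a first-pass internal triage, the two lists above could be encoded as a simple lookup. This is a minimal sketch under stated assumptions: the tag names are hypothetical labels an organization might assign to its own use cases, not terms from the Act, and a real classification requires legal review of the Act’s text and annexes.

```python
from enum import Enum
from typing import Iterable, Optional


class RiskTier(Enum):
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"


# Hypothetical tags mirroring the prohibited practices listed in this article.
PROHIBITED_PRACTICES = {
    "subliminal_manipulation",
    "exploiting_vulnerabilities",
    "social_scoring",
    "realtime_public_biometric_id",
}

# Hypothetical tags mirroring the high-risk areas listed in this article.
HIGH_RISK_AREAS = {
    "biometric_identification",
    "critical_infrastructure",
    "education_training",
    "employment",
    "essential_services",
    "law_enforcement",
    "migration_border_control",
    "justice_democratic_processes",
}


def triage(tags: Iterable[str]) -> Optional[RiskTier]:
    """Return the most severe tier matched by the tags, or None when neither
    list applies and the limited/minimal distinction needs separate review."""
    tag_set = set(tags)
    if tag_set & PROHIBITED_PRACTICES:
        return RiskTier.UNACCEPTABLE
    if tag_set & HIGH_RISK_AREAS:
        return RiskTier.HIGH
    return None


print(triage({"employment"}))                    # RiskTier.HIGH
print(triage({"employment", "social_scoring"}))  # RiskTier.UNACCEPTABLE
```

Checking the prohibited list first means a system that touches both lists is flagged at the stricter tier.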

The Act also introduces specific obligations for general-purpose AI models with systemic risk, which are presumed to include those trained with more than 10^25 FLOPs of cumulative compute. Providers of these “frontier” models must conduct additional evaluations, implement cybersecurity measures, and report serious incidents to the European Commission.
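To get a feel for where the 10^25 threshold sits, a team can estimate training compute from model size and dataset size. The sketch below uses the common rule of thumb of roughly 6 floating-point operations per parameter per training token for dense transformer models; that heuristic is an assumption from the machine learning literature, not a method defined in the Act, and the model sizes are hypothetical.

```python
EU_SYSTEMIC_RISK_FLOPS = 10**25  # threshold cited in this article


def estimate_training_flops(n_parameters: int, n_tokens: int) -> int:
    """Rough estimate: about 6 FLOPs per parameter per training token.
    A heuristic for dense transformers, not a regulatory formula."""
    return 6 * n_parameters * n_tokens


def exceeds_threshold(training_flops: int,
                      threshold: int = EU_SYSTEMIC_RISK_FLOPS) -> bool:
    """True when the estimate is strictly above the threshold."""
    return training_flops > threshold


# Hypothetical model A: 70 billion parameters, 15 trillion tokens.
model_a = estimate_training_flops(70 * 10**9, 15 * 10**12)   # 6.3e24

# Hypothetical model B: 400 billion parameters, 15 trillion tokens.
model_b = estimate_training_flops(400 * 10**9, 15 * 10**12)  # 3.6e25

print(exceeds_threshold(model_a))  # False
print(exceeds_threshold(model_b))  # True
```

An estimate that lands near the threshold is a signal to do a careful compute accounting rather than to rely on the heuristic.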

United States: A Sectoral and Executive-Led Approach

The United States has taken a markedly different approach, favoring sectoral regulation and executive action over comprehensive legislation. President Biden’s Executive Order on AI, issued in October 2023, represents the most significant federal AI policy initiative to date.

The executive order focuses heavily on safety and security, requiring developers of the most powerful AI systems to share safety test results with the government before public release. It also establishes new standards for AI safety and security, protects privacy, advances equity and civil rights, and promotes innovation and competition.

Key provisions include:

- Mandatory reporting for AI systems trained with more than 10^26 integer or floating-point operations

- Development of standards and tools for AI safety, security, and trustworthiness

- Enhanced oversight of AI use in critical infrastructure

- Measures to prevent AI-enabled fraud and deception

- Guidelines for government procurement and use of AI systems
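The US reporting threshold is ten times higher than the EU’s 10^25 figure mentioned earlier, so a given training run can fall under one regime and not the other. The following sketch makes that gap concrete; the dictionary labels and the function name are hypothetical, and the only inputs taken from this article are the two threshold values.

```python
# Compute thresholds cited in this article (total training operations).
COMPUTE_THRESHOLDS = {
    "EU AI Act (systemic-risk models)": 10**25,
    "US Executive Order (reporting)": 10**26,
}


def regimes_triggered(training_ops: int) -> list:
    """List the regimes whose compute threshold the training run exceeds."""
    return [name for name, threshold in COMPUTE_THRESHOLDS.items()
            if training_ops > threshold]


# Hypothetical training run of 3.6e25 operations: above the EU figure,
# below the US figure.
print(regimes_triggered(36 * 10**24))

# Hypothetical training run of 2e26 operations: above both.
print(regimes_triggered(2 * 10**26))
```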

Congress has been slower to act on comprehensive AI legislation, though several bills are under consideration. The Algorithmic Accountability Act would require impact assessments for high-risk automated systems, while the CREATE AI Act proposes establishing a national AI research infrastructure.

Sectoral regulators are also stepping up. The FTC has issued guidance on AI and algorithms, the FDA is developing frameworks for AI/ML-based medical devices, and the EEOC has published technical assistance documents on AI use in employment decisions.

China: Balancing Innovation with Control

China’s approach to AI regulation reflects its broader philosophy of state-directed technological development. The country has implemented several targeted regulations rather than a single comprehensive framework.

The Algorithmic Recommendation Management Provisions, effective since March 2022, require transparency in algorithmic recommendation services and give users the right to turn off algorithmic recommendations. The Deep Synthesis Provisions, implemented in January 2023, regulate AI-generated content and require clear labeling of synthetically generated media.

More significantly, China’s draft Measures for the Management of Generative Artificial Intelligence Services, released in April 2023 and followed by interim measures that took effect in August 2023, proposed extensive requirements for generative AI services offered to the public, including:

- Pre-approval from relevant authorities before public release

- Content filtering to ensure outputs align with “socialist core values”

- Regular security assessments and risk evaluations

- Detailed record-keeping of training data and model parameters

China’s regulatory approach serves dual purposes: ensuring AI development aligns with state objectives while maintaining the country’s competitive edge in AI research and deployment. The government continues to invest heavily in AI through initiatives like the “New Infrastructure” plan, which includes AI as a core component.

Emerging Frameworks: Singapore, UK, and Beyond

Other jurisdictions are developing their own approaches, often trying to balance the EU’s comprehensive regulatory model with the US’s innovation-friendly stance.

Singapore has adopted a pragmatic, sector-agnostic approach with its Model AI Governance Framework. Rather than binding legislation, Singapore provides voluntary guidance that organizations can adapt to their specific contexts. The framework emphasizes self-regulation while establishing clear expectations for responsible AI deployment.

The United Kingdom initially pursued a principles-based approach, assigning AI oversight to existing regulators rather than creating new institutions. However, following rapid developments in generative AI, the UK has begun considering more prescriptive measures. The government’s AI White Paper, published in March 2023, outlines five key principles:

- Safety, security, and robustness

- Appropriate transparency and explainability

- Fairness

- Accountability and governance

- Contestability and redress

Canada is advancing the Artificial Intelligence and Data Act (AIDA) as part of its broader Digital Charter Implementation Act. AIDA would regulate AI systems based on their potential impact, with stricter requirements for high-impact systems.

Japan continues to emphasize soft law approaches and international cooperation, hosting AI governance discussions through forums like the G7 and proposing the “AI Governance Guidelines” that focus on human-centric AI principles.

Strategic Implications for Organizations

The emergence of these diverse regulatory frameworks creates both challenges and opportunities for organizations deploying AI systems. The key strategic implications include:

Compliance Complexity: Organizations operating globally must navigate multiple, sometimes conflicting regulatory requirements. The EU’s AI Act alone requires significant compliance infrastructure, while US sectoral regulations add further layers of complexity.

Competitive Differentiation: Early movers in AI governance and compliance can differentiate themselves in the market. Organizations that build trust through transparent, responsible AI practices may gain competitive advantages as regulatory requirements tighten.

Innovation Constraints vs. Opportunities: While regulation may slow some aspects of AI development, it also creates opportunities for companies that specialize in AI safety, explainability, and compliance technologies.

Global Standardization Pressure: Despite regulatory fragmentation, market forces are pushing toward convergence on certain standards. Organizations that align with the most stringent requirements (often European) may find it easier to operate globally.

Investment in AI Governance: Regulatory compliance requires significant investment in AI governance infrastructure, including risk management systems, documentation processes, and technical capabilities for model testing and monitoring.

Preparing for the Regulated AI Future

As regulatory frameworks solidify, organizations should take proactive steps to prepare:

- Conduct AI Risk Assessments: Use comprehensive risk assessment processes that align with emerging regulatory requirements, particularly for high-risk applications.

- Invest in Explainable AI: Develop capabilities to explain AI decision-making processes, as transparency requirements are common across jurisdictions.

- Build Compliance Infrastructure: Establish systems for documentation, monitoring, and reporting that can scale across multiple regulatory frameworks.

- Engage with Policymakers: Participate in regulatory consultations and industry discussions to help shape emerging frameworks.

- Develop Cross-Functional Teams: Create teams that combine technical AI expertise with legal, compliance, and policy knowledge.

- Monitor Regulatory Developments: Establish processes to track regulatory changes across all relevant jurisdictions.
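One way to make the compliance infrastructure step concrete is an inventory of AI systems that records which governance artifacts exist for each one. The sketch below is only an illustration: the record fields, the artifact names, and the split between baseline and high-risk requirements are all hypothetical and would need to be defined with legal and compliance teams.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class AISystemRecord:
    name: str
    jurisdictions: Set[str]
    high_risk: bool
    artifacts: Set[str] = field(default_factory=set)


# Hypothetical artifact checklists, loosely following the steps above.
REQUIRED_FOR_ALL = {"owner_assigned", "regulatory_watch"}
REQUIRED_FOR_HIGH_RISK = {
    "risk_assessment",
    "explainability_report",
    "monitoring_logs",
}


def missing_artifacts(record: AISystemRecord) -> List[str]:
    """Return the required artifacts that the record does not yet have,
    sorted for stable reporting."""
    required = set(REQUIRED_FOR_ALL)
    if record.high_risk:
        required |= REQUIRED_FOR_HIGH_RISK
    return sorted(required - record.artifacts)


# Hypothetical inventory entry.
screener = AISystemRecord(
    name="resume-screener",
    jurisdictions={"EU", "US"},
    high_risk=True,
    artifacts={"risk_assessment", "owner_assigned"},
)

print(missing_artifacts(screener))
# ['explainability_report', 'monitoring_logs', 'regulatory_watch']
```

Keeping the checklist in data rather than in code makes it easier to extend per jurisdiction as requirements change.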

The future of AI regulation is not a distant concern. It is happening now, and its effects are already being felt across the industry. Organizations that proactively address these regulatory challenges while maintaining their innovation capabilities will be best positioned to thrive in the regulated AI landscape of tomorrow.

The next few years will be critical in determining how these frameworks evolve and interact. As AI technology continues to advance and regulatory frameworks mature, we can expect further convergence on core principles alongside some regional variation in implementation. The organizations that successfully navigate this complex landscape will be those that view regulation not as a constraint, but as a catalyst for building more trustworthy, robust, and ultimately more valuable AI systems.